SLAM++: Simultaneous Localisation and Mapping at the Level of Objects

We present the major advantages of a new 'object oriented' 3D SLAM paradigm, which takes full advantage in the loop of prior knowledge that many scenes consist of repeated, domain-specific objects and structures. As a hand-held depth camera browses a cluttered scene, real-time 3D object recognition and tracking provides 6DoF camera-object constraints which feed into an explicit graph of objects, continually refined by efficient pose-graph optimisation. This offers the descriptive and predictive power of SLAM systems which perform dense surface reconstruction, but with a huge representation compression. The object graph enables predictions for accurate ICP-based camera to model tracking at each live frame, and efficient active search for new objects in currently undescribed image regions. We demonstrate real-time incremental SLAM in large, cluttered environments, including loop closure, relocalisation and the detection of moved objects, and of course the generation of an object level scene description with the potential to enable interaction.

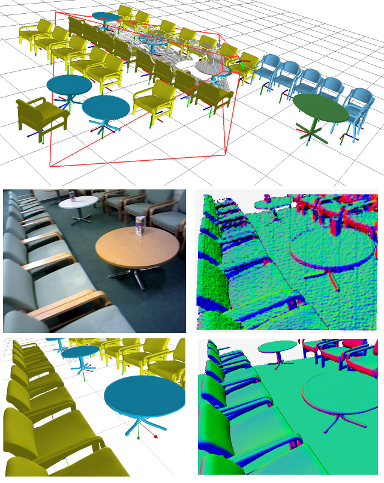

(top) A cluttered 3D scene is efficiently tracked and mapped in real-time directly at the object level. (left) A live view at the current camera pose and the synthetic rendered

objects. (right) We contrast a raw depth camera normal map with the corresponding high quality prediction from our object graph, used both for camera tracking and for masking object search.

(top) A cluttered 3D scene is efficiently tracked and mapped in real-time directly at the object level. (left) A live view at the current camera pose and the synthetic rendered

objects. (right) We contrast a raw depth camera normal map with the corresponding high quality prediction from our object graph, used both for camera tracking and for masking object search.

Context-aware augmented reality: virtual characters navigate the mapped scene and automatically find sitting places.

Context-aware augmented reality: virtual characters navigate the mapped scene and automatically find sitting places.